and 80% of companies see zero impact on their bottom line from GenAI. The winners, however, are getting an average of $3.71 back for every dollar spent.

The truth is harsh: the biggest challenges of generative AI software development are human, not technical. While your team debates Claude vs GPT-4, the real problems are hiding in conference rooms and org charts.

Let me show you the three challenges killing GenAI projects and the fixes to help you get the ball rolling.

Generative AI Software: The Biggest Challenges and How to Overcome Them

Challenge 1: Your Teams Are Fighting Each Other

Your biggest GenAI threat is the war happening inside your company. 68% of executives say GenAI created tension between IT and business teams:

- 72% develop AI apps in silos.

- Only 45% of employees think GenAI is working (while 75% of executives think it’s excellent).

Here’s what’s happening. Marketing sees GenAI as its escape from IT bureaucracy. They spin up shadow projects and bypass your security protocols. IT pushes back with governance requirements that business units see as roadblocks.

The Vicious Cycle:

Marketing bypasses IT → IT creates more restrictions → Business units rebel → Compliance troubles multiply. As a result, your C-suite believes you’re winning while your teams know you’re failing.

How to Fix It

Make teams share the pain. Don’t create committees. Build teams where IT and business success are tied together. For example, both teams take the hit if a marketing chatbot fails.

When everyone’s bonus depends on the same outcomes, silos disappear fast. Good shared metrics include:

- Customer satisfaction scores

- Processing time reductions

- Error rates

Meet weekly, not monthly. Steal from Agile. Short meetings where teams share what they’re building, what’s blocked, and what help they need. Make it routine, not a PowerPoint parade.

Challenge 2: You’re Flying Blind in Production

Only 27% of companies review AI-generated content before customers see it. That means 73% push AI outputs live without human oversight.

You’re not just risking harmful content. Attackers now exploit AI systems to steal data and break into applications. Your attack surface has exploded, and most companies haven’t noticed.

The governance gap problem:

You can’t hire enough people → Manual reviews don’t scale → Quarterly audits catch problems too late → Damage is already done. You need company-wide guardrails, but human reviewers alone can’t provide them.

How to Fix It

Automate governance in real-time. Use systems that monitor AI interactions as they happen. Don’t wait for quarterly audits to discover that your AI shared proprietary code or made biased hiring decisions.
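To make "monitor as it happens" concrete, here is a minimal sketch of an inline check that runs on every AI response before it leaves your system. The function name `check_output` and both patterns are illustrative assumptions, not a complete policy; a real deployment would likely lean on a dedicated policy engine or vendor guardrail service.

```python
import re
from dataclasses import dataclass, field

# Illustrative patterns only (assumptions for this sketch, not a full policy).
BLOCK_PATTERNS = {
    "api_key": re.compile(r"\b(?:sk|AKIA)[A-Za-z0-9_-]{16,}\b"),
    "internal_marker": re.compile(
        r"\bconfidential\b|\binternal use only\b", re.IGNORECASE
    ),
}


@dataclass
class Verdict:
    allowed: bool
    reasons: list = field(default_factory=list)


def check_output(text: str) -> Verdict:
    """Scan one AI response and report which patterns, if any, it matched."""
    reasons = [name for name, pattern in BLOCK_PATTERNS.items() if pattern.search(text)]
    return Verdict(allowed=not reasons, reasons=reasons)
```

Because the check is a plain function, it can sit in the request path and block or flag a response in the same call that produced it, instead of surfacing in an audit months later.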

Create review tiers based on risk. Not all AI outputs need the same oversight:

- High Risk (Human Review Required): Customer-facing content, financial recommendations, legal documents

- Medium Risk (Automated Checking): Internal documentation, marketing drafts, training materials

- Low Risk (Peer Review): Code suggestions, internal tools, development workflows
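The three tiers above map naturally to a lookup table. The sketch below is one possible encoding: the category labels are hypothetical names for the examples in the list, and defaulting unknown categories to human review is an assumption of this sketch, chosen so that unclassified content gets the strictest treatment.

```python
from enum import Enum


class Review(Enum):
    HUMAN = "human review required"
    AUTOMATED = "automated checking"
    PEER = "peer review"


# Keys are illustrative labels for the content types listed in the tiers.
TIER_BY_CATEGORY = {
    "customer_facing_content": Review.HUMAN,
    "financial_recommendation": Review.HUMAN,
    "legal_document": Review.HUMAN,
    "internal_documentation": Review.AUTOMATED,
    "marketing_draft": Review.AUTOMATED,
    "training_material": Review.AUTOMATED,
    "code_suggestion": Review.PEER,
    "internal_tool": Review.PEER,
    "development_workflow": Review.PEER,
}


def review_tier(category: str) -> Review:
    """Return the review tier for a content category."""
    # Unknown categories fall back to the strictest tier (an assumption).
    return TIER_BY_CATEGORY.get(category, Review.HUMAN)
```

Keeping the mapping in data rather than in scattered `if` statements means IT and business owners can review and change it together.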

Monitor continuously with alerts. Watch for unusual data access patterns, outputs that trigger filters, or performance drops. When alerts fire, someone acts within hours, not days.
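As a rough illustration of one such alert, the sketch below tracks how often recent outputs tripped a content filter and fires when the rate crosses a threshold. The class name, window size, and threshold are all assumptions; tune them to your own traffic.

```python
from collections import deque


class FilterRateAlert:
    """Fire when too many recent outputs triggered a content filter."""

    def __init__(self, window: int = 100, threshold: float = 0.05):
        self.events = deque(maxlen=window)  # True means the output hit a filter
        self.threshold = threshold

    def record(self, triggered: bool) -> bool:
        """Record one output and return True if the alert should fire."""
        self.events.append(triggered)
        rate = sum(self.events) / len(self.events)
        # Only alert once the window is full, to avoid noise from tiny samples.
        return len(self.events) == self.events.maxlen and rate > self.threshold
```

The same shape works for the other signals mentioned above: swap the boolean for "unusual data access" or "latency above budget" and keep the window logic.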

Challenge 3: Your Strategy Is Broken

Companies without a formal AI strategy see 37% success rates. Companies with one see roughly 80%. But most AI strategies are garbage: buzzwords that never address real problems.

The coming skills crisis: By 2027, 80% of software engineers will need major upskilling for AI-driven development. Your developers are learning to work alongside AI agents, validate AI code, and manage systems they didn’t build.

Role transformation happening now:

Traditional coding → AI-assisted development → AI agent management → Human oversight of autonomous systems

New “AI engineer” roles focus on managing AI agents instead of writing code from scratch.

How to Fix It

Strategy starts with people, not tech. Before picking models, map how roles change. Who’s responsible when AI code breaks? How do you validate AI recommendations? What happens when your AI agent makes a costly decision?

Upskill with real projects. Don’t send developers to AI bootcamps:

- Give them AI tools to solve actual business problems.

- Pair experienced developers with AI-curious team members.

- Make learning part of delivery, not separate from it.

Build straightforward career paths. Show traditional developers how to become AI engineers. Define success in AI-augmented roles. Show people where their careers go in an AI-first world.

Bonus Scenario

Your legacy systems weren’t built for AI: your databases can’t handle AI data volumes, and your network wasn’t designed for AI API calls. On top of that, AI outputs carry bias, hallucinations, and randomness, so software engineers can’t trust them unquestioningly.

Infrastructure mismatch problems:

- Legacy databases → Can’t handle AI data volumes

- Network architecture → Wasn’t designed for AI API calls

- Integration points → Breaking under AI workload

How to Fix It

Use a hybrid infrastructure. Cloud for AI processing and training, on-premises for sensitive data and legacy integration. Build APIs that can handle the traffic patterns AI agents create.

Try multiple models for different use cases, and route each task to the right model for the job:

- Claude works better for code completion

- Gemini handles system design better
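Routing can start as a simple lookup from task type to model client. In the sketch below, `call_claude` and `call_gemini` are hypothetical stand-ins that only tag the prompt; in real code they would wrap each vendor’s SDK. The task labels and the default choice are also assumptions.

```python
from typing import Callable, Dict


# Stand-in clients: real code would call each vendor's SDK here.
def call_claude(prompt: str) -> str:
    return f"[claude] {prompt}"


def call_gemini(prompt: str) -> str:
    return f"[gemini] {prompt}"


# Task labels follow the pairings in the list above.
ROUTES: Dict[str, Callable[[str], str]] = {
    "code_completion": call_claude,
    "system_design": call_gemini,
}


def route(task_type: str, prompt: str,
          default: Callable[[str], str] = call_claude) -> str:
    """Send the prompt to the model registered for this task type."""
    handler = ROUTES.get(task_type, default)
    return handler(prompt)
```

With routing in one place, swapping a model for a given task is a one-line change instead of a hunt through every caller.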

Integrate gradually with rollback plans. Start with non-critical processes. Build confidence. Expand slowly. Always have a way to turn off AI and fall back to humans when things go wrong.
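The "way to turn off AI" can be as small as a wrapper with an off switch. This is a minimal sketch, assuming a hypothetical `AIFeature` class whose two handlers you supply; it falls back to the human path both when the switch is off and when the AI handler raises an error.

```python
from typing import Callable


class AIFeature:
    """Wrap an AI handler with an off switch and a human fallback."""

    def __init__(self, ai_handler: Callable[[str], str],
                 human_handler: Callable[[str], str]):
        self.ai_handler = ai_handler
        self.human_handler = human_handler
        self.enabled = True

    def disable(self) -> None:
        """Turn the AI path off; all requests go to humans from now on."""
        self.enabled = False

    def handle(self, request: str) -> str:
        if not self.enabled:
            return self.human_handler(request)
        try:
            return self.ai_handler(request)
        except Exception:
            # Any AI failure falls back to the human path instead of erroring out.
            return self.human_handler(request)
```

In practice the `enabled` flag would usually live in a feature-flag service or config store so it can be flipped without a deploy.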

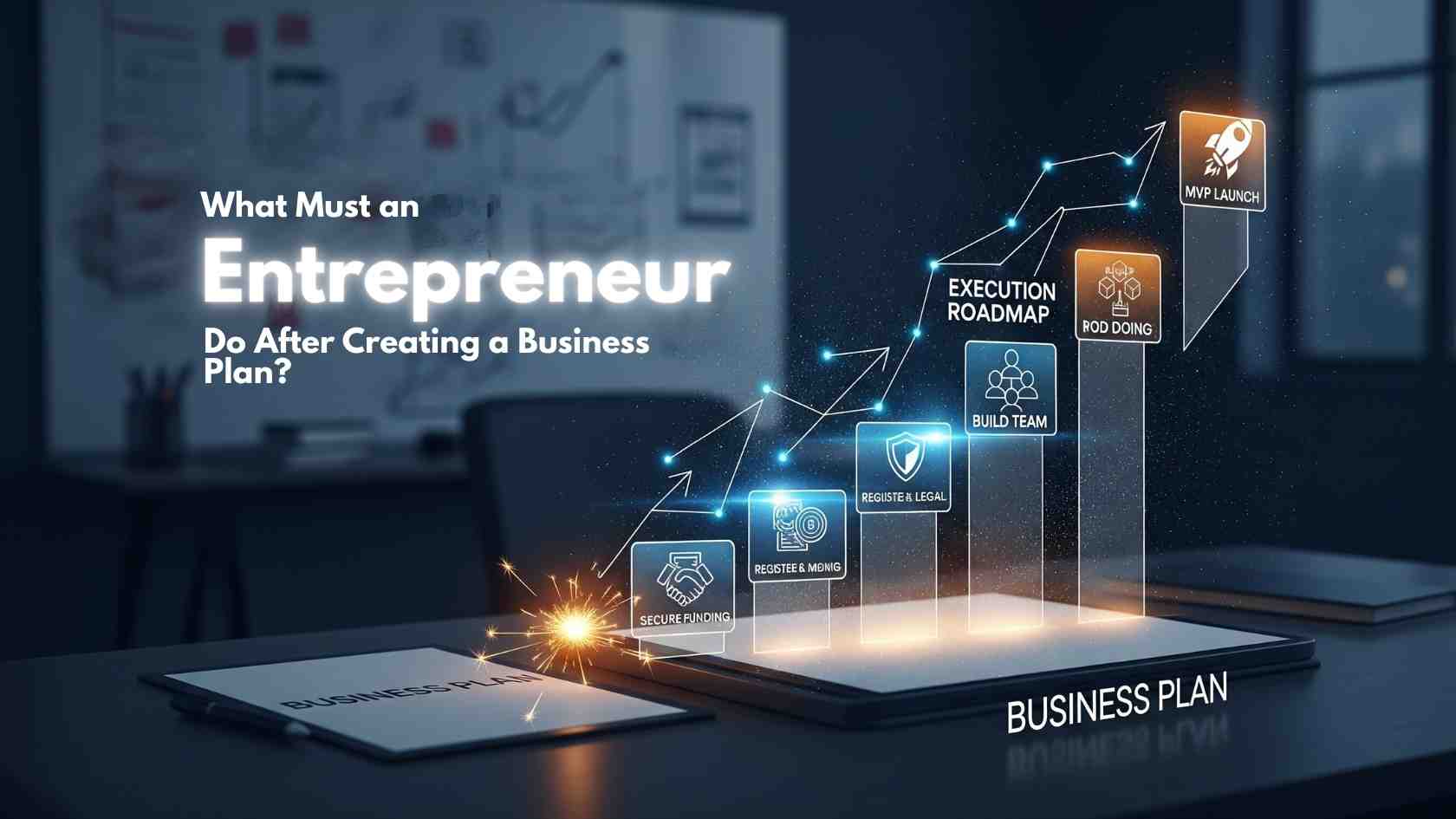

Your Action Plan

The companies winning with GenAI solved human problems first.

This Week:

Find where AI creates organisational tension → Have honest conversations with IT and business leaders → Document what’s broken.

This Month:

Build your first cross-functional AI team → Focus on one specific business problem → Give them shared metrics and authority.

This Quarter:

Set up automated governance → Start with customer-facing apps → Expand to high-risk use cases.

GenAI won’t wait for you to figure this out. Companies addressing these challenges now will define their industries in 2025. Those who don’t will explain to boards why their AI investments disappeared into chaos.

Which side will you choose?